AI & Digital Assets

May 9, 2025: AI & Digital Assets

- OCC: Banks Can Buy and Sell Their Customers’ Crypto Assets Held in Custody

- $45 Million Stolen from Coinbase Users in the Last Week

- Associations Urge Regulators to Remove Barriers for Banks Engaged in Digital Assets

- Musk’s xAI Joins TWG Global, Palantir for AI Push in Financial Sector

OCC: Banks Can Buy and Sell Their Customers’ Crypto Assets Held in Custody

Jesse Hamilton, CoinDesk

A new policy directive from the U.S. regulator of national banks says the institutions can also outsource crypto custody and execution to outside parties.

What to know:

- The Office of the Comptroller of the Currency is further letting bankers off the crypto leash, clarifying through letters Wednesday that banks can buy and sell their customers’ crypto assets.

- The banks can also use third-party servicers on crypto work, the OCC said.

- This follows earlier OCC guidance — matched by the Federal Deposit Insurance Corp. and the Federal Reserve — that reversed a previous policy restricting bankers to getting signoffs from the regulator before they could move on crypto matters.

The U.S. Office of the Comptroller of the Currency, which regulates national banks, has continued its about-face to earlier resistance to cryptocurrency in banking, issuing interpretive letters that say the institutions can — at their customers’ behest — buy and sell crypto assets in custody.

The newly explained policy stance released by the OCC on Wednesday also clarified that the bankers can outsource crypto activities to third parties, including custody and execution services. As long as it all still checks the boxes of the watchdog’s safety-and-soundness requirements, the OCC is giving the banks more crypto freedom.

This week’s move follows the agency’s March reversal of a longstanding policy that demanded bankers check with their government supervisors before moving ahead with new crypto business. “These letters signal a shift in the OCC’s approach,” Katherine Kirkpatrick Bos, Starkware general counsel and a former chief legal officer at Cboe Digital, noted on social media site X. She said the agency now seems to be melding crypto into traditional banking. And the additional guidance that third-parties are okay “is a boon to regulated crypto native service providers.” Read more

$45 Million Stolen from Coinbase Users in the Last Week

Vince Quill, CoinTelegraph

Coinbase users are falling prey to social engineering scams, costing users tens of millions of dollars in losses, according to ZachXBT.

Onchain sleuth and security analyst ZachXBT claims to have identified an additional $45 million in funds stolen from Coinbase users through social engineering scams in the past seven days alone.

According to the onchain detective, the $45 million figure represents the latest financial losses in a string of social engineering scams targeting Coinbase users, which ZachXBT said is a problem unique among crypto exchanges:

“Over the past few months, I have reported on nine figures stolen from Coinbase users via similar social engineering scams. Interestingly, no other major exchange has the same problem.” Cointelegraph reached out to Coinbase but was unable to get a response by the time of publication.

The claims made by ZachXBT place the total amount lost by Coinbase users to social engineering scams at $330 million annually and reflect the growing number of sophisticated attack strategies employed by threat actors to defraud crypto holders. Read more

Associations Urge Regulators to Remove Barriers for Banks Engaged in Digital Assets

Dave Kovaleski, Financial Regulation News

A group of financial services trade associations are urging the President’s Working Group on Digital Asset Markets to remove barriers to financial institutions engaging in digital asset activities.

In a joint letter, the associations acknowledge the progress the Federal Reserve, the FDIC and the OCC have made in rescinding policies that have hindered the ability of banks to engage in digital asset activities. However, the associations recommend additional steps that can be taken to further advance bank innovation.

“The U.S. will not be able to achieve a leadership position in digital assets and financial technology under the status quo,” the letter to the working group states. “Banks are an essential component of the financial and payments systems and are governed by a comprehensive regulatory framework carefully crafted to mitigate the risks inherent to financial activities. It is therefore critical that the federal banking agencies take further steps to facilitate banks’ ability to engage in digital asset activities.”

The associations made three key recommendations in the letter:

- Create consistent rules across agencies. The federal banking agencies should coordinate to issue joint rules and guidance when possible. If joint guidance isn’t possible, the agencies should at least align their policies to avoid conflicting requirements.

- Regulate the activity, not the technology. The agencies should affirm that banks may engage in permissible banking activities regardless of the technology used. A tokenized asset is no different from the traditional form of that asset; therefore, the regulatory framework should be technology neutral. Read more

Musk’s xAI Joins TWG Global, Palantir for AI Push in Financial Sector

Jaspreet Singh, Reuters

Elon Musk’s artificial intelligence company xAI has partnered with Palantir Technologies (PLTR.O) and investment firm TWG Global, the companies said on Tuesday, as they look to tap growing AI demand in the financial services industry.

The data analytics firm and TWG, led by Guggenheim Partners founder Mark Walter and entertainment financier Thomas Tull, had in March announced a joint venture aimed at AI deployment in financial services and insurance sectors.

TWG will lead the implementation efforts by working with company executives to design and deploy AI-powered solutions, the companies said. The collaboration will integrate xAI’s models, which include its Grok family of large language models and its Colossus supercomputer, into business operations. The companies expect “many more partners” after the inclusion of xAI.

Enterprise clients are investing in AI technologies to enhance services and introduce new features in their products, resulting in new partnerships for capturing market share. In March, Nvidia and xAI joined a consortium backed by Microsoft, investment fund MGX and BlackRock to expand AI infrastructure in the U.S.

May 2, 2025: AI & Digital Assets

- Stablecoin Regulation is Pending in Congress. Here Are Six Ways the Proposals Should be Improved.

- Relaxed Regulatory Oversight Spurs Bank-Crypto Activity

- Banks Ought to Be Aware of the Risks Arising from AI Adoption

- US Treasury’s OFAC Can’t Restore Tornado Cash Sanctions, Judge Rules

Stablecoin Regulation is Pending in Congress. Here Are Six Ways the Proposals Should be Improved.

Timothy Massad, Howell E. Jackson, and Dan Awrey, Atlantic Council

The US Congress may soon adopt legislation to regulate stablecoins—digital tokens pegged to the US dollar.

Although used today primarily to trade other crypto assets, stablecoins could become a widely used payment instrument, which would drive valuable innovation and competition. David Sacks, the Trump administration’s crypto “czar,” has predicted that stablecoins could also “ensure American dollar dominance internationally” and generate “trillions of dollars of demand for US Treasuries.” Stablecoin critics, in contrast, argue that legislation would legitimize a product that is widely used for money laundering and sanctions evasion while fueling crypto speculation and scams.

We have each advised or chaired executive branch agencies involved in digital asset policy and co-authored articles about why and how to regulate stablecoins. One of us testified before Congress in February about the pending legislation. We share concerns about the illicit use of stablecoins and the speculative nature of much of the crypto market. But stablecoin legislation is likely to be enacted this year: the Trump administration prioritized the issue in its digital assets executive order three days after the inauguration; Republican leaders in the Senate and House, together with Sacks, promised to pass stablecoin legislation within the administration’s first one hundred days; and bills have been passed out of both House and Senate committees. Therefore, the focus now should be on how best to regulate stablecoins, not whether to do so.

Stablecoins, moreover, already exist—the market capitalization is now over $230 billion and growing rapidly, almost all in dollar-pegged stablecoins. If the United States does not create a credible regulatory framework, the risks associated with stablecoins will only increase. In addition, the United States will lose the ability to set standards for liabilities denominated in its own currency because other jurisdictions are rapidly creating frameworks that permit dollar-based stablecoins.

The two bills under active consideration in Congress—the GENIUS Act in the Senate and the STABLE Act in the House—create some helpful guardrails to ensure that stablecoins are indeed stable. But the bills also contain significant flaws. Here are six ways that the bills must be improved to establish an effective stablecoin regulatory regime. Read more

Relaxed Regulatory Oversight Spurs Bank-Crypto Activity

Gabrielle Saulsbery, Banking Dive

Since January, federal bank regulators have done an about-face on crypto guidance, inspiring banks to explore the space.

Newly relaxed oversight on how banks deal in cryptocurrency has already inspired some activity between the two realms. Brian Foster, global head of wholesale at Coinbase, said his team has been “very busy meeting demand for banks, brokers and fintechs” now that federal banking regulators have walked back on the more skeptical bank-crypto guidance put forth under the Biden administration.

“Pretty much all of the large banks in the US are now doing something [to move forward with crypto] and we’re either behind the scenes with [or] live with pretty much all of them,” Foster said in an interview with Banking Dive. “Our [Request for Proposal] team is hard at work fielding all of these RFPs, so it’s definitely an all-out sprint,” Foster said.

Since January, federal bank regulators have done an about-face on crypto guidance. The Office of the Comptroller of the Currency, Federal Reserve and Federal Deposit Insurance Corp. have all withdrawn guidance that required banks to seek prior approval from regulators before dabbling in cryptocurrency. Regulators will instead monitor such activities through the normal supervisory process.

What’s changed, Foster said, isn’t banks’ intent to do something in crypto to meet customer demand. That’s “been there all along.” “What’s changing now is that the demand side has just been more pronounced as the market’s grown, coupled with the changing regulatory climate, which has just made the aperture for what’s possible a little bit wider and made the risk committees feel more comfortable,” Foster said. Read more

Banks Ought to Be Aware of the Risks Arising from AI Adoption

Samantha Barnes, International Banker

While financial institutions have been using artificial intelligence (AI) for many years, the introductions of generative artificial intelligence (GenAI) and large language models (LLMs) such as ChatGPT since late 2022 have ushered in a new and exciting era for the technology.

In turn, the uptake of AI-based applications and use cases within banks has never been more enthusiastic. Accompanying this frenzied adoption, however, are myriad risks of which banking sectors across the world ought to be aware.

Indeed, with the likes of big data, computational power, cloud computing and deep learning achieving giant developmental strides in recent years, powerful AI and machine learning (ML) applications are now on hand for banks to improve a variety of internal business operations, such as regulatory compliance, document summarisation, fraud detection and anti-money laundering (AML) capabilities, as well as client-facing solutions, such as virtual assistants and customer-personalisation tools.

However, as a December 2024 paper from the Bank for International Settlements (BIS) detailed, while the wider use of AI is likely to bring transformative benefits to the financial sector, it may also exacerbate existing risks. “The risks AI poses when used by financial institutions are largely the same risks financial authorities are typically concerned about,” according to the report. “These include micro-prudential risks, such as credit risk, insurance risk, model risk, operational risks, reputational risks; conduct or consumer protection risks; and macroprudential or financial stability risks.”

Similarly, a November 2024 report from the Financial Stability Board (FSB) observed that while AI offers benefits, such as improved operational efficiency, regulatory compliance, personalised financial products and advanced data analytics, it may also potentially amplify certain “financial sector vulnerabilities” and thereby pose risks to financial stability. Read more

US Treasury’s OFAC Can’t Restore Tornado Cash Sanctions, Judge Rules

Martin Young, CoinTelegraph

The US Treasury Department’s actions against Tornado Cash were unlawful, and it can’t sanction the crypto mixer again, a Texas federal court judge has ruled.

The US Treasury Department’s Office of Foreign Assets Control can’t restore or reimpose sanctions against the crypto mixing service Tornado Cash, a US federal court has ruled. Austin federal court judge Robert Pitman said in an April 28 judgment that OFAC’s sanctions on Tornado Cash were unlawful and that the agency was “permanently enjoined from enforcing” sanctions.

Tornado Cash users led by Joseph Van Loon had sued the Treasury, arguing that OFAC’s addition of the platform’s smart contract addresses to its Specially Designated Nationals and Blocked Persons (SDN) list was “not in accordance with law.” OFAC had sanctioned Tornado Cash in August 2022, accusing the protocol of helping launder crypto stolen by the North Korean hacking collective, the Lazarus Group.

The agency dropped the platform from the sanctions list on March 21 and argued that the matter was “moot” after a court ruled in favor of Tornado Cash in January. This latest amended ruling prevents OFAC from re-sanctioning Tornado Cash or putting it back on the blacklist. Initially, the court denied a motion for partial summary judgment and ruled in favor of the Treasury. However, the Fifth Circuit reversed the decision and instructed the lower court to grant partial summary judgment to the plaintiffs, which led to the sanctions being revoked. Read more

Apr. 25, 2025: AI & Digital Assets

- Bad Bots Are Taking Over the Web. Banks Are Their Top Target

- Crypto Drainers Now Sold as Easy-To-Use Malware at IT Industry Fairs

- SEC’s Crypto Custody Roundtable Begins Tomorrow, Here’s What You Should Know

- Coinbase Considers Applying for Federal Bank Charter

Bad Bots Are Taking Over the Web. Banks Are Their Top Target

Penny Crosman, American Banker

Internet insecurity has reached a new milestone: More web traffic (51%) now comes from bots, small pieces of software that run automated tasks, rather than humans, according to a new report.

More than a third (37%) comes from so-called bad bots — bots designed to perform harmful activities, such as scraping sensitive data, spamming and launching denial-of-service attacks — for which banks are a top target. (“Good bots,” such as search engine crawlers that index content, account for 14% of web activity.)

About 40% of bot attacks on application programming interfaces in 2024 were directed at the financial sector, according to the 2025 Bad Bot Report from Imperva, a Thales company. Almost a third of those (31%) involved scraping sensitive or proprietary data from APIs, 26% were payment fraud bots that exploited vulnerabilities in checkout systems to trigger unauthorized transactions and 12% were account takeover attacks in which bots used stolen or brute-forced credentials to gain unauthorized access to user accounts, then commit a breach or theft from there.

For the report, researchers analyzed bot attack data for more than 4,500 customers, 53,000 customer accounts and more than 200,000 customer sites. So this report is not a complete representation of all internet activity, but experts say it matches what they are seeing in the field. Read more

Crypto Drainers Now Sold as Easy-To-Use Malware at IT Industry Fairs

Adrian Zmudzinski, CoinTelegraph

Crypto drainers have evolved into an accessible, professionalized software-as-a-service industry, enabling low-skill actors to steal cryptocurrency.

Crypto drainers, malware designed to steal cryptocurrency, have become easier to access as the ecosystem evolves into a software-as-a-service (SaaS) business model.

In an April 22 report, crypto forensics and compliance firm AMLBot revealed that many drainer operations have transitioned to a SaaS model known as drainer-as-a-service (DaaS). The report revealed that malware spreaders can rent a drainer for as little as 100 to 300 USDT.

AMLBot CEO Slava Demchuk told Cointelegraph that “previously, entering the world of cryptocurrency scams required a fair amount of technical knowledge.” That is no longer the case. Under the DaaS model, “getting started isn’t significantly more difficult than with other types of cybercrime.”

Demchuk explained that would-be drainer users join online communities to learn from experienced scammers who provide guides and tutorials. This is how many criminals involved with traditional phishing campaigns transition to the crypto drainer space. Read more

SEC’s Crypto Custody Roundtable Begins Tomorrow, Here’s What You Should Know

Callan Quinn, Decrypt

As crypto custody rules face scrutiny, the SEC gathers industry voices to debate the future of safeguarding digital assets.

In brief

- The session is the second in a four-part SEC roundtable series designed to address key regulatory issues in the crypto industry.

- Senior SEC officials, legal scholars, and executives from top crypto firms will participate in discussions on custody compliance.

- Industry participants have voiced concerns that existing SEC rules do not adequately reflect the operational realities of digital asset markets.

The U.S. Securities and Exchange Commission will convene its second crypto policy roundtable on Friday, focusing on crypto asset custody rules and regulatory gaps. The event is part of a four-part series launched by the SEC’s Crypto Task Force to gather input and discuss policy options around digital asset regulation.

Opening remarks will come from several senior SEC figures, including new Chairman Paul S. Atkins, who was sworn in earlier this week and has vowed to bring regulatory clarity to the crypto industry. The roundtable will feature two panels: one on “Custody Through Broker-Dealers and Beyond” and the second on “Investment Adviser and Investment Company Custody.” Read more

Coinbase Considers Applying for Federal Bank Charter

Gabrielle Saulsbery, Banking Dive

Regulatory willingness to work with crypto seems to have inspired Coinbase, and reportedly others, to engrain themselves deeper into the traditional financial sector.

Coinbase is considering applying for a federal bank charter, a company spokesperson confirmed, as cryptocurrency firms seek to capitalize on regulatory amiability. “This is something Coinbase is actively considering but has not made any formal decisions yet,” the spokesperson told Banking Dive, without elaboration.

Coinbase is one of four crypto companies identified by the Wall Street Journal Monday as currently planning to seek a bank charter, alongside crypto custodian BitGo and stablecoin issuers Circle and Paxos. Spokespeople for Paxos, Circle and BitGo declined to comment.

Just one crypto-native bank has a federal bank charter: Anchorage Digital scored a national trust charter from the Office of the Comptroller of the Currency in 2021. Paxos and a third firm, Protego, received conditional OCC charters that year, too, though the charters expired in 2023 before the firms were able to meet the charters’ conditions. Read more

Apr. 18, 2025: AI & Digital Assets

- Limiting the Risky Side of AI in Financial Services

- Can Bitcoin Save America?: CoinDesk Spotlight with Senator Cynthia Lummis

- As the U.S. Shrinks Back, the World Moves Forward on CBDCs

- California DFPI Proposes Digital Asset Licensing Rule

Limiting the Risky Side of AI in Financial Services

Greg Anderson, BAI

Banks should monitor ‘poisoned’ AI, set rules for employee use of this technology and regularly invest in security.

Artificial intelligence (AI) has permeated nearly every facet of our lives, from our phones to our internet searches and beyond. Banks and other financial institutions are no exception: By one count, 72% of these organizations have already adopted AI.

As leaders pursue any competitive edge they can, the majority (66%) are willing to accept most risks of AI. However, AI can pose very real threats. To truly make the most of AI investments, consider taking these steps to better secure your business.

Preventing ‘poisoned’ AI

Have you read the stories about Reddit users attempting to keep tourists out of their favorite local restaurants by positively reviewing other places so these dining options are more likely to show up in an AI summary? It seems harmless, but the same methodology can be used for far more nefarious purposes. These are called side-channel attacks, and AI models connected to or using any kind of large language model (LLM) are susceptible to them.

For example, a bad actor can publish a fake repository that looks nearly identical to a legitimate one, then “seed” mentions of it on Reddit and other sites where models are being trained to poison all code the AI will generate, giving the bad actor access to any of your sensitive systems and data that poisoned code is used on. Read more

Can Bitcoin Save America?: CoinDesk Spotlight with Senator Cynthia Lummis

CoinDesk

Senator Cynthia Lummis joins “CoinDesk Spotlight” to discuss her enthusiasm for establishing a strategic bitcoin reserve and how it could alleviate the U.S. national debt. Plus, she details the potential of bitcoin’s decentralized nature and why bipartisan cooperation in Congress is crucial for regulatory developments in the digital assets industry. This content should not be construed or relied upon as investment advice. It is for entertainment and general information purposes.

As the U.S. Shrinks Back, the World Moves Forward on CBDCs

Tom Nawrocki, Payments Journal

Although the United States has shown little interest in establishing a central bank digital currency (CBDC) of its own, other nations around the world continue to advance their research and pilot programs.

The Bank for International Settlements (BIS), which has taken the lead in the development of CBDCs, announced six new blockchain-related projects last year.

However, the landscape isn’t yet fully cleared for CBDCs to take center stage. A report from Javelin Strategy & Research, CBDCs: Where Are We Now?, looks at the progress of key projects around the world, why the UK is emerging as a leader in the technology, and how these initiatives are likely to shape the future of global financial services.

The “Central Bank of Central Banks”

Established in 1930, the BIS is owned by a consortium of central banks around the world, with both the Federal Reserve Bank of New York and the U.S. Federal Reserve being members. It has been at the forefront of CBDC development, driving collaboration, research, and the testing of real-world applications.

“Being the central bank of central banks in some ways, they recognized the need for digital transformation in central banking early on,” said Joel Hugentobler, Cryptocurrency Analyst at Javelin Strategy & Research and author of the report. “They established the BIS Innovation Hub in 2019 focusing on CBDCs, tokenization, and digital payments. They’ve played a crucial role in research and experimentation with CBDCs and led collaborative projects worldwide, given their position.” Read more

California DFPI Proposes Digital Asset Licensing Rule

A.J. S. Dhaliwal, Mehul N. Madia, Maxwell Earp-Thomas of Sheppard, Mullin, Richter & Hampton LLP – Consumer Finance and Fintech Blog/National Law Review

On April 4, the California Department of Financial Protection and Innovation (DFPI) issued proposed regulations under the Digital Financial Assets Law (DFAL). The proposal provides clarification on DFAL’s licensing framework and identifies when digital asset activity may qualify for exemptions under California’s Money Transmission Act.

The proposal builds on legislation passed in 2023 and 2024—including Assembly Bill 39, Senate Bill 401, and Assembly Bill 1934—which established the DFAL and later pushed back its implementation deadline. Beginning July 1, 2026, companies engaging in covered digital financial asset business activity with or on behalf of California residents must be licensed by DFPI, have a pending application on file, or qualify for an exemption.

The proposed regulations aim to implement the Digital Financial Assets Law by clarifying licensing procedures, exemptions, and reporting obligations. The rule is intended to enhance transparency, improve oversight, and support the development of a safe, regulated digital asset market in California. Read more

Apr. 11, 2025: AI & Digital Assets

- Are Stablecoins a Threat or an Opportunity for Banks?

- DOJ Ends Crypto Enforcement Team; Shifts Focus to Terrorism and Fraud

- CSBS Flags Key Risks in Draft Stablecoin Legislation

- Strategy Reports Unrealized $5.91B Loss on Digital Assets

Are Stablecoins a Threat or an Opportunity for Banks?

Pymnts.com

Banks have always evolved to provide more effective systems to serve their customers. But while historically that evolution was relatively linear, as the financial world continues to digitize, banks are faced with a growing array of options to meet customer needs.

Stablecoins — cryptocurrencies pegged to fiat currencies such as the U.S. dollar — have moved from niche assets to instruments that could redefine how money moves, where it is stored and who controls it. For traditional banks, stablecoins represent a dual-edged proposition: both a disruptive force and a potential avenue for innovation. With the news that Tether is considering offering a U.S.-only stablecoin, the central question is whether banks will be disintermediated, or whether they can co-opt the technology to evolve their roles in a changing financial landscape.

The U.S. Securities and Exchange Commission’s (SEC) Division of Corporation Finance also earlier determined that stablecoins are not securities and do not need to be registered, and the shifting domestic regulatory landscape has opened the door for financial service stakeholders to dip their toes into the crypto space, with the Office of the Comptroller of the Currency (OCC) reclarifying certain crypto banking permissions last month.

The potential for banks to act as custodians, compliance partners or even validators in blockchain networks could open up avenues for growth that align with their core strengths. In today’s changing landscape, inertia is increasingly no longer an option.

The Deposit Drain Dilemma

At the heart of the issue is the role of bank deposits in the financial system. Banks rely on customer deposits to fund loans and support economic activity at the local level. Stablecoins, if adopted widely, could siphon off those deposits. Users might prefer holding stablecoins in digital wallets over parking money in checking or savings accounts. This potential migration of capital could starve traditional banks of their primary funding source, challenging their ability to lend and compete. Read more

DOJ Ends Crypto Enforcement Team; Shifts Focus to Terrorism and Fraud

MacKenzie Sigalos, CNBC

Key Points

- The U.S. Justice Department closed the National Cryptocurrency Enforcement Team.

- U.S. attorney’s offices will now take the lead on digital asset cases, focusing primarily on crimes involving terrorism.

- This is the latest in a sweeping set of pro-crypto moves under President Donald Trump aimed at rolling back what his administration views as regulatory overreach by the Biden administration.

The U.S. Justice Department abruptly shut down its National Cryptocurrency Enforcement Team, signaling a major shift in how the federal government will handle crypto-related crimes going forward, according to a memo sent Monday night by Deputy Attorney General Todd Blanche.

In it, Blanche outlines a decentralized approach in which U.S. attorney’s offices will now take the lead on digital asset cases, focusing primarily on crimes involving terrorism.

Going forward, the document said efforts would now focus on “prosecuting individuals who victimize digital asset investors, or those who use digital assets in furtherance of criminal offenses such as terrorism, narcotics and human trafficking, organized crime, hacking, and cartel and gang financing.”

The disbandment of the unit is the latest in a series of sweeping regulatory reversals under President Donald Trump, who made crypto-friendly policies a centerpiece of his 2024 campaign. Read more

CSBS Flags Key Risks in Draft Stablecoin Legislation

James G. Gatto, Alexander Lazar, & Maxwell Earp-Thomas; Sheppard, Mullin, Richter & Hampton LLP Law of the Ledger/National Law Review

On April 1, the Conference of State Bank Supervisors (CSBS) submitted a letter to the House Financial Services Committee expressing concerns with an introduced draft of H.R. 2392—the Stablecoin Transparency and Accountability for a Better Ledger Economy (STABLE) Act of 2025 (the “Act”)—which purports to establish a comprehensive regulatory framework for payment stablecoins in the U.S.

In the letter, CSBS expresses support for the development of a national framework for payment stablecoin issuers (PSIs), while warning that the current draft would unnecessarily preempt state regulatory authority and introduce risks to consumer protection and financial stability.

CSBS contended that, as currently drafted, the Act would centralize excessive authority over the stablecoin industry in a single federal agency, likely the OCC, undermining the dual banking system. The letter also emphasized that states already regulate over $50 billion in stablecoin activity and called on Congress to retain the benefits of a cooperative federal-state oversight model.

The letter identified five key changes needed to preserve the United States’ longstanding cooperative federalism model for the banking system and mitigate related risk factors, including:

- Limiting PSI activities to stablecoin issuance. The proposed draft of the Act allows PSIs to engage in non-stablecoin-related financial activities. The CSBS argues that this, in combination with the Act’s capital and liquidity restrictions, increases operational and liquidity risks that could destabilize the market.

- Removing unnecessary preemption of state authority. As drafted, the Act would expand federal preemption to (i) the parent of a federal PSI, (ii) state authority over PSI subsidiaries of national banks, (iii) PSI subsidiaries of state-chartered banks, and (iv) other non-stablecoin activities approved by federal regulators. Read more

Strategy Reports Unrealized $5.91B Loss on Digital Assets

Maura Webber Sadovi, Yahoo Finance/CFO Dive

Dive Brief:

- Strategy, a software and bitcoin treasury company formerly known as MicroStrategy, reported an unrealized $5.91 billion loss on digital assets for the quarter ended March 31, which it expects will result in a net loss for the quarter that will be partly offset by a $1.69 billion income tax benefit, according to a Monday business update on its bitcoin holdings and other matters contained in a securities filing.

- The Tysons Corner, Virginia-based company’s unaudited report comes as bitcoin’s market value has fallen back to levels seen just after President Trump’s election, and as new generally accepted accounting standards for certain crypto assets including bitcoin went into effect late last year, requiring companies to account for the assets at fair value rather than treating them as an intangible asset.

- “We have adopted ASU 2023-08 [the Financial Accounting Standard Board’s accounting standards update] as of January 1, 2025,” the company, one of the largest corporate holders of bitcoin, said in its filing. “The standard is now effective, and we have applied a cumulative-effect net increase to the opening balance of retained earnings as of January 1, 2025, of $12.745 billion. Due in particular to the volatility in the price of bitcoin, we expect the adoption of ASU 2023-08 to have a material impact on our financial results, increase the volatility of our financial results and affect the carrying value of our bitcoin on our balance sheet.”

Dive Insight:

Finance leaders that hold bitcoin are grappling with a number of moving parts as they close their books on the first quarter since new accounting standards for certain digital assets went into effect amid a bitcoin slump. Read more

Apr. 4, 2025: AI & Digital Assets

- FDIC: Banks Can Engage in Crypto-Related Activities Without Prior Notice

- Crypto Has a Regulatory Capture Problem in Washington — Or Does It?

- Stablecoins Keep Racking Up Milestones, but Can They Crack B2B Payments?

- Investments in AI and Digital Assets Surge While Data and Legacy Tech Challenges Persist, Broadridge Digital Transformation Study Finds

FDIC: Banks Can Engage in Crypto-Related Activities Without Prior Notice

Cooley

On March 28, 2025, the Federal Deposit Insurance Corporation (FDIC) clarified that FDIC-supervised institutions do not need to provide notice or obtain approval from the FDIC prior to engaging in crypto-related activities.

This guidance rescinds prior guidance issued in 2022, which required FDIC-supervised institutions to notify the FDIC before engaging in any crypto-related activities.

New guidance

Financial Institution Letter (FIL-7-2025) provides that FDIC-supervised institutions may engage in “permissible activities, including activities involving new and emerging technologies such as crypto-assets and digital assets” without notifying the FDIC in advance, provided such institutions “adequately manage the associated risks” and “conduct all activities in a safe and sound manner and consistent with all applicable laws and regulations.” The FDIC notes that associated risks may include market and liquidity risks, operational and cybersecurity risks, consumer protection requirements, and anti-money laundering requirements.

“Crypto-related activities” include, without limitation, “acting as crypto-asset custodians; maintaining stablecoin reserves; issuing crypto and other digital assets; acting as market makers or exchange or redemption agents; participating in blockchain- and distributed ledger-based settlement or payment systems, including performing node functions; as well as related activities such as finder activities and lending.”

Change from prior guidance

This latest guidance rescinds 2022 guidance requiring FDIC-supervised institutions to notify the FDIC prior to engaging in any crypto-related activities. That guidance provided that, following such notification, the FDIC would request “information necessary to allow the agency to assess the safety and soundness, consumer protection, and financial stability implications of such activities.” The FDIC implemented that procedure in response to what it described as “significant safety and soundness risks, as well as financial stability and consumer protection concerns” posed by crypto-related activities. Read more

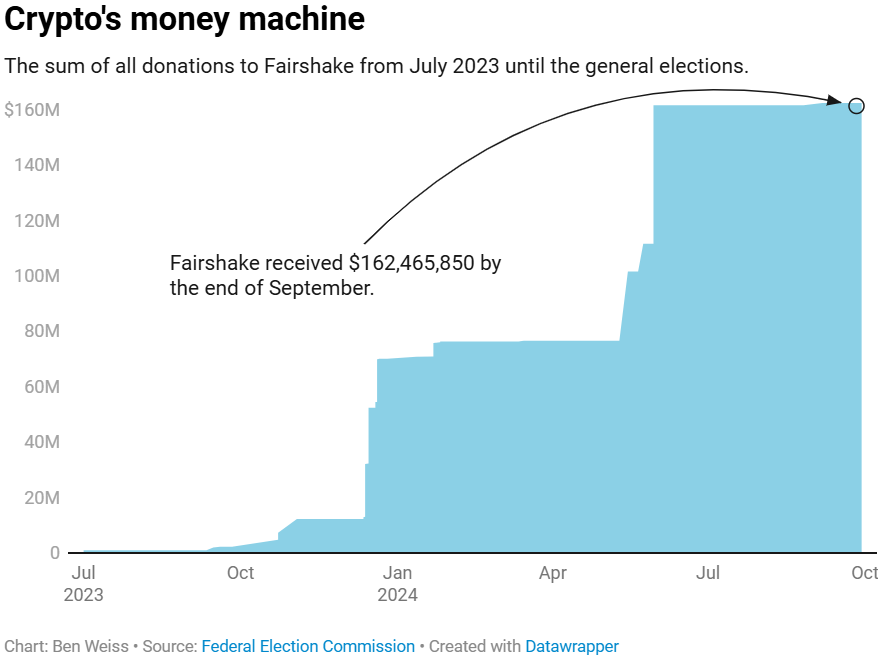

Crypto Has a Regulatory Capture Problem in Washington — Or Does It?

Aaron Wood, CoinTelegraph

The crypto industry’s sway in Washington, DC, has made it more likely that the industry will get beneficial legislation, but it’s also creating problems.

Concerns of regulatory capture — a situation in which regulators or lawmakers are co-opted to serve the interests of a small constituency — have grown as crypto lobbying gains influence in Washington.

The risks of regulatory capture are twofold: First, the public interest is shut out from policy-making in favor of a single industry or company, and second, it can make regulators blind to or paralyzed by economic risks.

Now, not even three months into Trump’s presidency, American lawmakers and industry crypto observers have voiced concerns that this regulatory capture could not only negatively affect the country but curb competition within the crypto industry as well.

Regulatory capture in the battle for crypto policy

In a March 28 letter, prominent members of the US Senate Banking Committee and Committee on Finance addressed Acting Comptroller Rodney Hood and Michelle Bowman, chair of the Federal Reserve Board of Governors’ Committee on Supervision and Regulation. Read more

Stablecoins Keep Racking Up Milestones, but Can They Crack B2B Payments?

Pymnts.com

The allure of stablecoins is straightforward. They make payments simple by promising the efficiency of cryptocurrencies without the volatility.

There are few places where efficiency matters more than B2B payments. The global B2B payments market is valued at over $125 trillion annually. Yet, the sector remains plagued by inefficiencies that include high transaction fees, slow settlement times and cumbersome processes involving intermediaries.

These issues are particularly pronounced in cross-border transactions, where settlement can take days, and stacked fees can eat up chunks of each transaction. Stablecoins seem to observers like a natural fit for what ails B2B. With near-instant settlement, reduced costs and transparency through blockchain technology, stablecoins could address many of the pain points associated with traditional payment systems.

However, as stablecoins continue to rack up milestones unimaginable just a few years ago, the question remains: will they?

Embracing Blockchain for Business Payments

When it comes to the embrace of stablecoins, B2B payments present a different challenge than consumer-facing applications. Businesses demand not only efficiency but also compliance, integration with legacy systems, and certainty in regulatory frameworks. Read more

Investments in AI and Digital Assets Surge While Data and Legacy Tech Challenges Persist, Broadridge Digital Transformation Study Finds

Broadridge Financial Solutions/Stock Titan

Broadridge Financial Solutions has released its fifth annual Digital Transformation & Next-Gen Technology Study, revealing significant trends in financial services technology adoption. 80% of firms are making moderate-to-large investments in AI this year, with 72% specifically investing in GenAI, up from 40% in 2024.

The study highlights that 41% of executives feel their technology strategy isn’t moving fast enough, while 46% believe legacy tech is hampering resiliency. Firms plan to allocate 29% of their total IT spend to technology innovation over the next two years, a seven percentage point increase from last year.

Key findings include:

- 71% of firms are making major investments in blockchain and DLT, up from 59% in 2024

- 64% are making significant cryptocurrency investments, up from 51% in 2024

- 86% of firms are integrating cloud technology

- 58% identify data harmonization as important for maximizing ROI

- 40% admit to having data quality issues

Mar. 21, 2025: AI & Digital Assets

- US Treasury Argues No Need for Final Court Judgment in Tornado Cash Case

- Crypto for Advisors: What Is a Bitcoin Strategic Reserve?

- AI Trends in Financial Services

- Crypto Clarity: OCC’s New Guidelines Pave the Way for Banking Innovation

US Treasury Argues No Need for Final Court Judgment in Tornado Cash Case

Stephen Katte, CoinTelegraph

The US Treasury says because it dropped Tornado Cash from its sanctions list on March 21, the legal challenge against it should be considered moot.

The US Treasury Department says there is no need for a final court judgment in a lawsuit over its sanctioning of Tornado Cash after dropping the crypto mixer from the sanctions list. In August 2022, Treasury’s Office of Foreign Assets Control (OFAC) sanctioned Tornado Cash after alleging the protocol helped launder crypto stolen by North Korean hacking crew the Lazarus Group, leading to a number of Tornado Cash users filing a lawsuit against the regulator.

After a court ruling in favor of Tornado Cash, the US Treasury dropped the mixer from its sanctions list on March 21, along with several dozen Tornado-affiliated smart contract addresses from the Specially Designated Nationals (SDN) list, and has now argued “this matter is now moot.”

“Because this court, like all federal courts, has a continuing obligation to satisfy itself that it possesses Article III jurisdiction over the case, briefing on mootness is warranted,” the US Treasury said. However, Coinbase chief legal officer Paul Grewal said the Treasury’s hope to have the case declared moot before an official judgment can be made isn’t the correct legal process.

“After grudgingly delisting TC, they now claim they’ve mooted any need for a final court judgment. But that’s not the law, and they know it,” he said. “Under the voluntary cessation exception, a defendant’s decision to end a challenged practice moots a case only if the defendant can show that the practice cannot ‘reasonably be expected to recur.’”

Grewal pointed to a 2024 Supreme Court ruling that found a legal complaint from Yonas Fikre, a US citizen who was put on the No Fly List, was not mooted by his removal from the list because the ban could be reinstated at a later date. Read more

Crypto for Advisors: What Is a Bitcoin Strategic Reserve?

Alex Tapscott, CoinDesk

The U.S. advances plans for its Bitcoin Strategic Reserve as part of its goal of becoming a leader in digital assets. What’s the current state of the Reserve and why does it matter?

In today’s Crypto for Advisors, Alex Tapscott explains what the Bitcoin Strategic Reserve is and why it matters to investors. Then, Bryan Courchesne from DAIM answers questions investors have about setting up a personal strategic reserve in Ask an Expert.

Will Trump’s Bitcoin Reserve Move the Needle?

On March 7, President Trump signed an executive order creating both a Strategic Bitcoin Reserve and a U.S. Digital Asset Stockpile, the latter comprised of tokens like ETH, SOL, XRP and ADA. The Strategic Bitcoin Reserve (SBR) and the Digital Asset Stockpile will be capitalized initially with crypto assets obtained by the Department of Treasury through criminal and civil asset forfeiture. Analysts estimate that they will capitalize the SBR with $6.9 billion in bitcoin currently in the government wallet.

The news disappointed some bitcoin bulls, who were annoyed by the inclusion of other crypto assets and by the relatively modest initial goals of the Reserve. Altcoin fans were initially euphoric following Trump’s tweet announcing the plan but soon became disillusioned as it became evident that the plan for the U.S. Digital Asset Stockpile was severely limited in scope — the government sits on only $400 million of non-BTC coins and has no intention of adding more.

So what should we make of all this?

The idea of a strategic reserve for critical assets or commodities is not new. The U.S. government maintains strategic stockpiles of gold and petroleum, and governments and central banks hold large balances of foreign currencies, for example. Read more

AI Trends in Financial Services

Adam Lieberman, Finastra/Finextra

In this first quarter of 2025, AI advancements are continuing apace. In the realm of financial services, use cases must adhere to restrictions and regulations around data usage, but there are a number of areas that are gaining significant traction and will continue to mature over the next year. Here are six trends that I see gaining traction and delivering value.

Increased usage of multimodal models

Recent advances in multimodal large language models are set to unlock a plethora of opportunities for financial services organizations in the year ahead. As the name suggests, multimodal models are trained to handle data and inputs from multiple sources, such as text, images, video and audio.

To date we’ve seen financial services organizations focusing more on the text capabilities of Gen AI – instructing models via text-based prompts to access and summarize written information stored in a variety of formats across multiple systems. Going forward, the increased usage of voice will provide additional methods of interaction with models.

Harnessing the capabilities of multimodal models, and their ability to process different formats of data simultaneously, will be hugely important. Financial services professionals will be able to orchestrate the creation of multimedia presentations and reports – distilling information stored in different formats in systems across the organization into timely and actionable insights.

A key use case for these models in financial services will be accessing and unlocking the potential of unstructured data that sits in business silos. For example, transcribing customer calls from audio to text, or extracting data from paper-based documents that have been scanned and digitized, to make the data more accessible. Read more

Crypto Clarity: OCC’s New Guidelines Pave the Way for Banking Innovation

Kelly A. Lenahan-Pfahlert, Ballard Spahr

On March 7, 2025, the Office of the Comptroller of the Currency (“OCC”) released Interpretive Letter 1183, marking a pivotal change in regulatory guidance for national banks and federal savings associations engaging in cryptocurrency activities. This recent directive, issued under Acting Comptroller Rodney Hood, rescinds the requirements set by Interpretive Letter 1179 from November 2021. The updated guidance reaffirms the permissibility of certain crypto-related activities while eliminating the need for prior supervisory non-objection.

Background

Interpretive Letter 1183 marks a significant regulatory update under Acting Comptroller Rodney Hood, who assumed his role earlier this year. This letter aims to standardize how banks engage with digital assets, removing the constraints imposed by previous guidance. The rescinded directive from former Acting Comptroller Michael Hsu required banks to seek supervisory approval before participating in crypto activities—a process that was often seen as a barrier to innovation and growth in the sector.

In contrast, the new guidance focuses on reducing regulatory burdens and fostering transparency, thereby encouraging responsible innovation within the banking industry. The OCC continues to support activities outlined in earlier interpretive letters, such as crypto asset custody (IL 1170), stablecoin reserves (IL 1172), and blockchain payment facilitation (IL 1174), initially introduced under former Comptroller Brian Brooks. Read more

Mar. 21, 2025: AI & Digital Assets

- OpenAI Asks White House for Relief from State AI Rules

- Fintech and Crypto Firms Eye U.S. State Bank Licenses Amid Trump Administration

- Microsoft Identifies Remote Access Trojan Built to Drain Crypto Wallets

- AI Is Making Organized Crime Worse in the EU, Europol Warns

OpenAI Asks White House for Relief from State AI Rules

Bloomberg

OpenAI has asked the Trump administration to help shield artificial intelligence companies from a growing number of proposed state regulations if they voluntarily share their models with the federal government.

In a 15-page set of policy suggestions released on Thursday, the ChatGPT maker argued that the hundreds of AI-related bills currently pending across the US risk undercutting America’s technological progress at a time when it faces renewed competition from China. OpenAI said the administration should consider providing some relief for AI companies big and small from state rules – if and when enacted – in exchange for voluntary access to models.

The recommendation was one of several included in OpenAI’s response to a request for public input issued by the White House Office of Science and Technology Policy in February as the administration drafts a new policy to ensure US dominance in AI. President Donald Trump previously rescinded the Biden administration’s sprawling executive order on AI and tasked the science office with developing an AI Action Plan by July.

To date, there has been a notable absence of federal legislation governing the AI sector. The Trump administration has generally signaled its intention to take a hands-off approach to regulating the technology. But many states are actively weighing new measures on everything from deepfakes to bias in AI systems. Read more

Fintech and Crypto Firms Eye U.S. State Bank Licenses Amid Trump Administration

Binance News

According to PANews, several fintech and cryptocurrency companies are exploring the possibility of becoming state-chartered banks in the United States under the administration of U.S. President Donald Trump.

Industry executives believe that the current administration is more favorable towards their sector, providing an opportunity to obtain licenses that regulatory bodies were previously slow or reluctant to approve.

Alexandra Steinberg Barrage, a partner at Troutman Pepper Locke, noted an increase in interest, with several applications currently being processed. However, she emphasized that the trend has not fully developed, as clients remain cautiously optimistic, awaiting stability as the government appoints new banking regulators.

Two sources involved in potential applications reported a significant rise in discussions and preparations for bank licenses, though the number of companies that will proceed remains uncertain.

While becoming a bank subjects institutions to more regulatory scrutiny, it can also reduce capital and operational costs in certain scenarios. Licenses can enhance a company’s legitimacy in the eyes of customers and expand business and market opportunities. Read more

Microsoft Identifies Remote Access Trojan Built to Drain Crypto Wallets

Wesley Grant, Payments Journal

Sophisticated malware is becoming an increasingly potent threat, as evidenced by the remote access trojan (RAT) that was recently discovered by Microsoft.

Dubbed StilachiRAT, the malware is designed to scan the Google Chrome browser for any of 20 crypto wallet extensions, including platforms like Coinbase Wallet, MetaMask, and Trust Wallet.

According to Microsoft, once the RAT detects a crypto wallet, it employs various techniques to siphon information from the system. These include extracting saved browser credentials and monitoring clipboard activity for passwords or crypto keys. Once this sensitive data falls into the hands of bad actors, they can quickly drain the victim’s crypto wallet.

Bringing Awareness to the Capabilities

Microsoft first discovered evidence of StilachiRAT in November, and the tech firm said that it hasn’t yet been able to identify the cybercriminals behind the malware.

Though the RAT hasn’t yet gained widespread traction, Microsoft felt it was necessary to raise awareness about the malware because of its capabilities and the rapid evolution of the malware ecosystem, and to help reduce the number of potential victims. Read more

AI Is Making Organized Crime Worse in the EU, Europol Warns

Associated Press/Fast Company

A new report said AI-driven attacks range from drug trafficking to people smuggling, money laundering, cyber attacks, and online scams.

The European Union’s law enforcement agency cautioned Tuesday that artificial intelligence is turbocharging organized crime that is eroding the foundations of societies across the 27-nation bloc as it becomes intertwined with state-sponsored destabilization campaigns.

The grim warning came at the launch of the latest edition of a report on organized crime published every four years by Europol that is compiled using data from police across the EU and will help shape law enforcement policy in the bloc in coming years.

“Cybercrime is evolving into a digital arms race targeting governments, businesses and individuals. AI-driven attacks are becoming more precise and devastating,” said Europol’s Executive Director Catherine De Bolle. “Some attacks show a combination of motives of profit and destabilization, as they are increasingly state-aligned and ideologically motivated,” she added.

The report, the EU Serious and Organized Crime Threat Assessment 2025, said offenses ranging from drug trafficking to people smuggling, money laundering, cyber attacks and online scams undermine society and the rule of law “by generating illicit proceeds, spreading violence, and normalizing corruption.”

The volume of child sexual abuse material available online has increased significantly because of AI, which makes it more difficult to analyze imagery and identify offenders, the report said. Read more

RELATED READING: ‘AI Valley’ Author Worries There’s ‘So Much Power in The Hands of Few People’

Mar. 14, 2025: AI & Digital Assets

- Banking on AI: How Lockdowns Paved the Way For A Generative Future

- U.S. Senate’s Banking Chair Pushes Debanking Bill After Crypto Uproar

- Beyond AI Regulation: How Government and Industry Can Team Up to Make the Technology Safer Without Hindering Innovation

- PODCAST Lawfare Daily: Carla Reyes and Drew Hinkes on the Evolution and Future of Crypto Policy

Banking on AI: How Lockdowns Paved the Way For A Generative Future

Jeremy Hudson, Moody’s

When COVID-19 lockdowns swept the globe in 2020, businesses faced a stark reality: adapt or be made obsolete. For banks, survival meant embracing digital transformation at an unprecedented pace. Confronted with a sudden need to serve customers remotely and safeguard operations, banks were forced to accelerate investments in technology.

That digitization has become a defining force in banking performance worldwide. In the US, for instance, Moody’s Ratings’ Q2 2024 banking update highlights how large banks have improved operating leverage, a trend that suggests digital investment is yielding returns. Regional US banks, where net interest income remains a primary revenue driver, have also seen some relief from net interest margin compression, potentially reflecting the long-term impact of their digital strategies.

What began as a crisis-driven response has since evolved into a broader recalibration of banking’s relationship with technological innovation. Having built out digital infrastructure at speed, banks now had the proper scaffolding to leverage AI in ways previously considered still years—if not decades—into the future.

Today, AI is quickly becoming embedded in financial services. Moody’s Ratings’ 2025 global outlook for banks recently shifted from negative to stable, helped in part by an “improved application of technology”. The outlook also notes strong profitability for 2025, as banks “increase use of new technologies, including generative artificial intelligence”. Financial institutions that embraced digitalization during the pandemic are now poised to reap larger benefits, emerging leaner, more efficient, and better positioned for both sustained profitability and innovative growth. Read more

U.S. Senate’s Banking Chair Pushes Debanking Bill After Crypto Uproar

Jesse Hamilton, CoinDesk

Senator Tim Scott, the chief of the banking committee, is backing a bill to stop U.S. regulators from citing “reputational risk” as a reason to block clients.

What to know:

- Senator Tim Scott, the chairman of the Senate Banking Committee, is championing legislation that would end banking regulators’ use of “reputational risk” when weighing a bank’s business choices.

- The crypto industry has objected to regulators pressuring banks to drop customers seen as overly risky, even if their businesses operated within the law.

The industry’s ongoing campaign against the debanking of crypto businesses and leaders has secured a legislative push from a top U.S. senator, Tim Scott, who is championing a bill that would cut out federal banking regulators’ ability to use “reputational risk” as a reason to steer banks away from customers.

That practice had been cited by Republicans as a problem area in recent congressional hearings, which examined how digital assets businesses had been systematically cut out of U.S. banking relationships because of perceptions that the regulators — including the Federal Reserve, Federal Deposit Insurance Corp. and the Office of the Comptroller of the Currency — didn’t want them there. Read more

Beyond AI Regulation: How Government and Industry Can Team Up to Make the Technology Safer Without Hindering Innovation

Paulo Carvão, Harvard Kennedy School/The Conversation

Imagine a not-too-distant future where you let an intelligent robot manage your finances. It knows everything about you.

It follows your moves, analyzes markets, adapts to your goals and invests faster and smarter than you can. Your investments soar. But then one day, you wake up to a nightmare: Your savings have been transferred to a rogue state, and they’re gone.

You seek remedies and justice but find none. Who’s to blame? The robot’s developer? The artificial intelligence company behind the robot’s “brain”? The bank that approved the transactions? Lawsuits fly, fingers point, and your lawyer searches for precedents, but finds none. Meanwhile, you’ve lost everything.

This is not the doomsday scenario of human extinction that some people in the AI field have warned could arise from the technology. It is a more realistic one and, in some cases, already present. AI systems are already making life-altering decisions for many people, in areas ranging from education to hiring and law enforcement. Health insurance companies have used AI tools to determine whether to cover patients’ medical procedures. People have been arrested based on faulty matches by facial recognition algorithms.

By bringing government and industry together to develop policy solutions, it is possible to reduce these risks and future ones. I am a former IBM executive with decades of experience in digital transformation and AI. I now focus on tech policy as a senior fellow at Harvard Kennedy School’s Mossavar-Rahmani Center for Business and Government. I also advise tech startups and invest in venture capital. Read more

PODCAST Lawfare Daily: Carla Reyes and Drew Hinkes on the Evolution and Future of Crypto Policy

Carla Reyes, Associate Professor of Law at SMU Dedman School of Law, and Drew Hinkes, a Partner at Winston & Strawn with a practice focused on digital assets and advising financial services clients, join Kevin Frazier, Contributing Editor at Lawfare, to discuss the latest in cryptocurrency policy. The trio review the evolution of crypto-related policy since the Obama era, discuss the veracity of dominant crypto narratives, and explore what’s next from the Trump administration on this complex, evolving topic.

Read more:

- TRM Labs 2025 Crypto Crime Report: https://www.trmlabs.com/2025-crypto-crime-report

- 2023 FDIC National Survey of Unbanked and Underbanked Households: https://www.fdic.gov/household-survey

Mar. 7, 2025: AI & Digital Assets

- How the Biggest Crypto Heist in History Went Down

- Reality Check: Is AI’s Promise to Deliver Competitive Advantage a Dangerous Mirage?

- The Titans Battling for Control of the Crypto Future

- S.E.C. Declares Memecoins Are Not Subject to Oversight

How the Biggest Crypto Heist in History Went Down

The cryptocurrency exchange Bybit lost $1.5 billion to North Korean hackers last month — and it all traced back to an account on a free digital storage service.

David Yaffe-Bellany, New York Times

On the night of Feb. 21, Ben Zhou, the chief executive of the cryptocurrency exchange Bybit, logged on to his computer to approve what appeared to be a routine transaction. His company was moving a large amount of Ether, a popular digital currency, from one account to another.

Thirty minutes later, Mr. Zhou got a call from Bybit’s chief financial officer. In a trembling voice, the executive told Mr. Zhou that their system had been hacked.

“All of the Ethereum is gone,” he said.

When Mr. Zhou approved the transaction, he had inadvertently handed control of an account to hackers backed by the North Korean government, according to the F.B.I. They stole $1.5 billion in cryptocurrencies, the largest heist in the industry’s history.

To pull off the astonishing breach, the hackers exploited a simple flaw in Bybit’s security: its reliance on a free software product. They penetrated Bybit by manipulating a publicly available system that the exchange used to safeguard hundreds of millions of dollars in customer deposits. For years, Bybit had relied on the storage software, developed by a technology provider called Safe, even as other security firms sold more specialized tools for businesses. Read more

Reality Check: Is AI’s Promise to Deliver Competitive Advantage a Dangerous Mirage?

Matt Doffing, The Financial Brand

Banking executives must plan for an uncomfortable truth: Every bank has access to the same AI capabilities through their core and digital banking vendors.

Banking already is a homogenized product. So what happens when AI tools make our banks’ products even more commoditized and undifferentiated?

At a recent bank board meeting, Jason Henrichs, Chief Executive Officer at Alloy Labs Alliance, prompted the AI platform, Claude, with the following: I’m giving a presentation to the board of a bank company about AI. What question is no one going to ask but should?

Claude responded, “Given your background in banking and fintech, let me offer a provocative but critical question that often goes unasked in board presentations about AI: What happens when AI makes our bank’s products completely commoditized and undifferentiated?”

Henrichs shared Claude’s suggestions recently on LinkedIn, prompting insightful discussion from bankers, fintech leaders, thought leaders, and even from Claude.

Commoditization Fallout?

What happens when AI makes our bank’s products completely commoditized and undifferentiated? It’s not a defeatist question for the industry. Instead, it suggests a shortcoming in bank and credit union strategic planning about AI, Henrichs says. Read more

The Titans Battling for Control of the Crypto Future

Angus Berwick, Wall Street Journal

Trump’s call to bring crypto into the mainstream has raised the stakes in a kill-or-be-killed battle between an iconoclastic Italian billionaire and his almost perfect American foil

Giancarlo Devasini, one of the world’s newest billionaires, leads a reclusive life in this Alpine town. He stays in a modest apartment by the lake, strolls the cobbled streets with a black hoodie pulled over his head—and rages about the American rival he believes is trying to kill his business.

Devasini is the main owner of Tether, whose eponymous digital dollar is an indispensable part of the cryptocurrency industry. Tether’s centrality has earned Devasini tremendous wealth and vast influence over the sector, and the support of a top ally of President Trump.

Critics say Tether has become the tool of choice for criminal groups to spirit money around the globe.

Out to disrupt his business empire is Devasini’s almost perfect foil, Jeremy Allaire, founder of Tether’s archrival, Circle, which issues its own so-called stablecoin, called USD Coin, or USDC. Allaire, a suit-wearing executive as comfortable in Davos as he is on Wall Street or the halls of Congress, is running a campaign to regulate Tether out of existence.

Devasini has told business associates that Circle is bad-mouthing Tether to politicians and whipping up enforcement actions against his company. They said that in his view, Circle wants to turn the industry into just another regulated corner of finance, while Devasini wants crypto to stay true to its swashbuckling, antiestablishment roots. Read more

S.E.C. Declares Memecoins Are Not Subject to Oversight

Matthew Goldstein, New York Times

The agency said the novelty digital assets were not securities, a month after President Trump issued his own memecoin.

The Securities and Exchange Commission said on Thursday that so-called memecoins — novelty digital assets — are not subject to regulatory oversight because they are not considered securities.

The determination could have big ramifications for the crypto industry and President Trump, who issued his own memecoin days before his inauguration.

The S.E.C.’s policy on memecoins is consistent with the light regulatory approach that Mr. Trump promised to take toward the crypto industry during his campaign.

Mr. Trump and his family firmly embraced digital currencies last year by teaming up with a new digital assets company, World Liberty Financial. The memecoin the president introduced during pre-inaugural festivities in January, called $Trump, spurred controversy because it swung wildly in value and generated hefty trading fees for Mr. Trump. Read more

Feb. 28, 2025: AI & Digital Assets

- Meme Coins: What You Need to Know About the Wild Corner of the Crypto Market

- The Rise of Artificial Intelligence at JPMorgan

- U.S. AI Safety Institute Could Face Big Cuts

- How AI Will End the Era of Risking It All to Modernize

Meme Coins: What You Need to Know About the Wild Corner of the Crypto Market

Nora Redmond, Business Insider

President Donald Trump’s support for cryptocurrencies has sparked renewed interest in digital currencies. The former crypto critic has vowed to make the US the world’s “crypto capital” — and even launched his own “meme coin” called $Trump.

What are meme coins?

Meme coins are cryptocurrencies often based on internet jokes or fads and often have fun logos or are associated with animals or characters. Personalities have also jumped on the bandwagon to launch their own tokens, including singer Jason Derulo, Caitlyn Jenner, boxer Floyd Mayweather Jr — and Trump himself.

Perhaps the best-known meme coin is dogecoin, the Shiba Inu dog-themed coin that Trump’s close ally Elon Musk has long hyped. Dogecoin, a likely influence for the name of Musk’s Department of Government Efficiency, surged soon after the election but is down about 40% over the past month.

Along with other digital currencies such as bitcoin, meme coins are based on blockchains — most often Solana.

Meme coins are usually very volatile and can experience rapid price swings. Coins generally start with prices of one cent or less, meaning percentage changes can be significant. They are also sometimes linked to scammers and “rug pulls,” when promoters attract buyers and then stop trading before the coin crashes, allowing them to pocket some of the proceeds.

Nicolai Sondergaard, research analyst at multichain analytics platform Nansen, told Business Insider that meme coins were not inherently risky investments. “What makes them dangerous, however, is the people launching them with nefarious intentions and not playing fair,” he said. “This is how we end up seeing the many issues of tokens being launched, quickly rising several hundred if not thousands of per cent, only to quickly dump on the majority.” Read more

The Rise of Artificial Intelligence at JPMorgan

Alexander Saeedy, Wall Street Journal

Teresa Heitsenrether, overseeing the AI rollout at America’s largest bank, on what it means for thousands of employees and millions of Chase Bank customers

America’s banks have been using artificial intelligence to spot fraud for years. JPMorgan Chase, the country’s biggest bank, is now making a bigger bet on AI, working to put it at the center of how its 300,000 employees work. In the past year, JPMorgan rolled out a tool it calls LLM Suite for most of its employees that allows them to use generative artificial intelligence from OpenAI and others. It’s also adding generative AI in call centers for agents that deal with Chase Bank customers.

Teresa Heitsenrether, JPMorgan’s chief data and analytics officer, was tapped to oversee the firm’s AI strategy in 2023. She isn’t a software engineer or a technologist. But after more than 20 years running key businesses at JPMorgan, she was able to identify where the firm can best use technology to boost productivity—and had earned the trust of the company’s leaders.